Robotics technology advances rapidly and attracts global attention. Walking with robot dogs and having humanoids help with housework no longer feels distant.

The next question matters now. How do people work with robots? How do robots cooperate with other robots? This report examines these questions through the lens of OpenMind.

Key Takeaways

OpenMind develops the open-source runtime ‘OM1’. OM1 creates an environment where all robots communicate and cooperate freely, regardless of manufacturer.

OpenMind’s blockchain network ‘FABRIC’ establishes robot identity verification, transaction records, and distributed verification systems. FABRIC forms the foundation for an autonomous machine economy.

OpenMind uses the ERC-7777 standard to define robot behavioral rules. OpenMind is also working on a ‘Physical AI Safety Layer’ with AIM Intelligence. Together, these aim to prevent malfunctions and block external attacks.

1. Robotics Is Growing Up Faster Than You Think

Robotics no longer belongs to the distant future or serves only a select few.

Just a few years ago, robots appeared only in labs or industrial sites. Now they step into our daily lives. People walking with robot dogs in the park or humanoids helping with household chores are no longer scenes from science fiction movies.

1X Technologies recently unveiled ‘Neo’, a household humanoid that brings this reality closer. Consumers can now own a personal domestic assistant robot for a $499 monthly subscription or $20,000 upfront. The price remains steep, but the significance is clear: robotics technology has reached consumer homes.

Beyond Neo, global companies accelerate innovation through intense competition. Notable players include Figure, Tesla, and Boston Dynamics from the United States, and Unitree from China. Tesla plans to mass-produce its humanoid ‘Optimus’ starting in 2026 and price it below its vehicles.

The robotics industry expands rapidly into the consumer market. What seemed like a distant future arrives faster than expected and opens doors to a new everyday reality.

2. Robotics in Daily Life: Possibilities and Limitations

What changes can robotics technology bring to our daily lives? Let’s imagine a future where we live with robots.

Neo cleans the house. Unitree’s robot dog plays with the children. Optimus goes to the mart and shops for dinner ingredients. Each robot takes on a separate task, and they all work at the same time. The user’s day becomes far more efficient.

Let’s take this one step further. What if robots cooperate to handle complex tasks together?

Optimus shops at the mart. Neo checks the refrigerator and requests additional ingredients from Optimus. Figure adjusts the recipe based on the user’s allergy information. Each robot connects in real-time and operates organically as one team. The user simply commands, “I want omurice.”

But this remains a distant dream. Robots still lack the intelligence to respond flexibly to new situations. An even bigger problem exists: each robot operates within a closed system built on its own technology stack.

Robots from different manufacturers struggle to exchange data or cooperate smoothly. iPhones share photos via AirDrop with each other but cannot AirDrop to Samsung Galaxy phones. Robots face the same limitation.

Of course, cooperation is already possible under narrow conditions, as Figure’s Helix demonstrates: robots from the same manufacturer running the same technology stack.

But reality is more complex. Look at the current robotics industry: a wide variety of robots is flooding the market, much like the Cambrian explosion.

Future users will select various robots based on their preferences and needs rather than sticking to one brand. Our homes today prove this pattern. We choose Samsung refrigerators, LG washing machines, and Dyson vacuum cleaners.

Now imagine robots from multiple manufacturers working together in one home. A kitchen robot cooks. A cleaning robot mops the floor. The two robots cannot share location information. Even if they share data, they cannot interpret it properly. Their distance calculation methods and measurement units differ.

They cannot track each other’s movement paths, so they collide. This is a simple example. More robots and more complex tasks magnify the risks of confusion and collision.

3. OpenMind: Building a World Where Robots Work Together

OpenMind emerges to solve these problems.

OpenMind breaks free from closed technology stacks and pursues an open ecosystem where all robots work together. This approach enables robots from different manufacturers to communicate and cooperate freely.

OpenMind presents two core foundations to realize this vision. First, ‘OM1’ serves as an open-source runtime for robots. OM1 provides a standardized communication method that enables all robots to understand and cooperate with each other despite different hardware.

Second, ‘FABRIC’ operates as a blockchain-based network. FABRIC builds a trustworthy collaboration environment between robots. These two technologies create an ecosystem where all robots operate organically as one team, regardless of manufacturer.

3.1. OM1: Making Robots Smarter and More Flexible

As we saw earlier, existing robots remain trapped in closed systems and struggle to communicate with each other.

More specifically, robots exchange information through binary data or structured data formats. These formats vary by manufacturer, which blocks compatibility. For example, Company A’s robot expresses location as (x, y, z) coordinates while Company B’s defines it as (lat, long, height). Even in the same space, they cannot understand each other’s positions because each manufacturer uses different data structures and formats.
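To make the mismatch concrete, here is a minimal Python sketch of the glue code one robot pair would need without a shared layer. It assumes, hypothetically, that Company A reports local east/north/up offsets in meters from a reference point both robots know, while Company B reports latitude and longitude in degrees plus height in meters. The conversion uses a flat-Earth (equirectangular) approximation that is only reasonable over a building-sized area; the class and function names are illustrative, not any manufacturer's real format.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


@dataclass
class PoseA:
    """Hypothetical Company A format: local east/north/up offsets in meters."""
    x: float
    y: float
    z: float


@dataclass
class PoseB:
    """Hypothetical Company B format: latitude/longitude in degrees, height in meters."""
    lat: float
    long: float
    height: float


def b_to_a(pose: PoseB, origin: PoseB) -> PoseA:
    """Convert a Company B pose into Company A's local frame around `origin`.

    Equirectangular approximation: accurate only over short distances.
    """
    d_lat = math.radians(pose.lat - origin.lat)
    d_long = math.radians(pose.long - origin.long)
    x = EARTH_RADIUS_M * math.cos(math.radians(origin.lat)) * d_long  # east
    y = EARTH_RADIUS_M * d_lat  # north
    z = pose.height - origin.height  # up
    return PoseA(x, y, z)


origin = PoseB(37.5665, 126.9780, 30.0)  # hypothetical shared reference point
kitchen_robot = b_to_a(PoseB(37.56651, 126.97801, 30.5), origin)
print(f"{kitchen_robot.x:.1f} m east, {kitchen_robot.y:.1f} m north, {kitchen_robot.z:.1f} m up")
# 0.9 m east, 1.1 m north, 0.5 m up
```

Every new pairing of formats needs another adapter like this one, which is the per-pair work a shared runtime aims to remove.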

OpenMind solves this problem through ‘OM1’, an open-source runtime. Think of it like Android, which runs on devices from many different manufacturers. OM1 works the same way, enabling robots to communicate in a common language regardless of hardware.

OM1 makes robots understand and process information based on natural language. OpenMind’s paper “A Paragraph is All It Takes” explains this well. Robot communication needs no complex commands or formats. A single paragraph of natural language context enables mutual understanding and cooperation.

Now let’s examine how OM1 operates in detail.

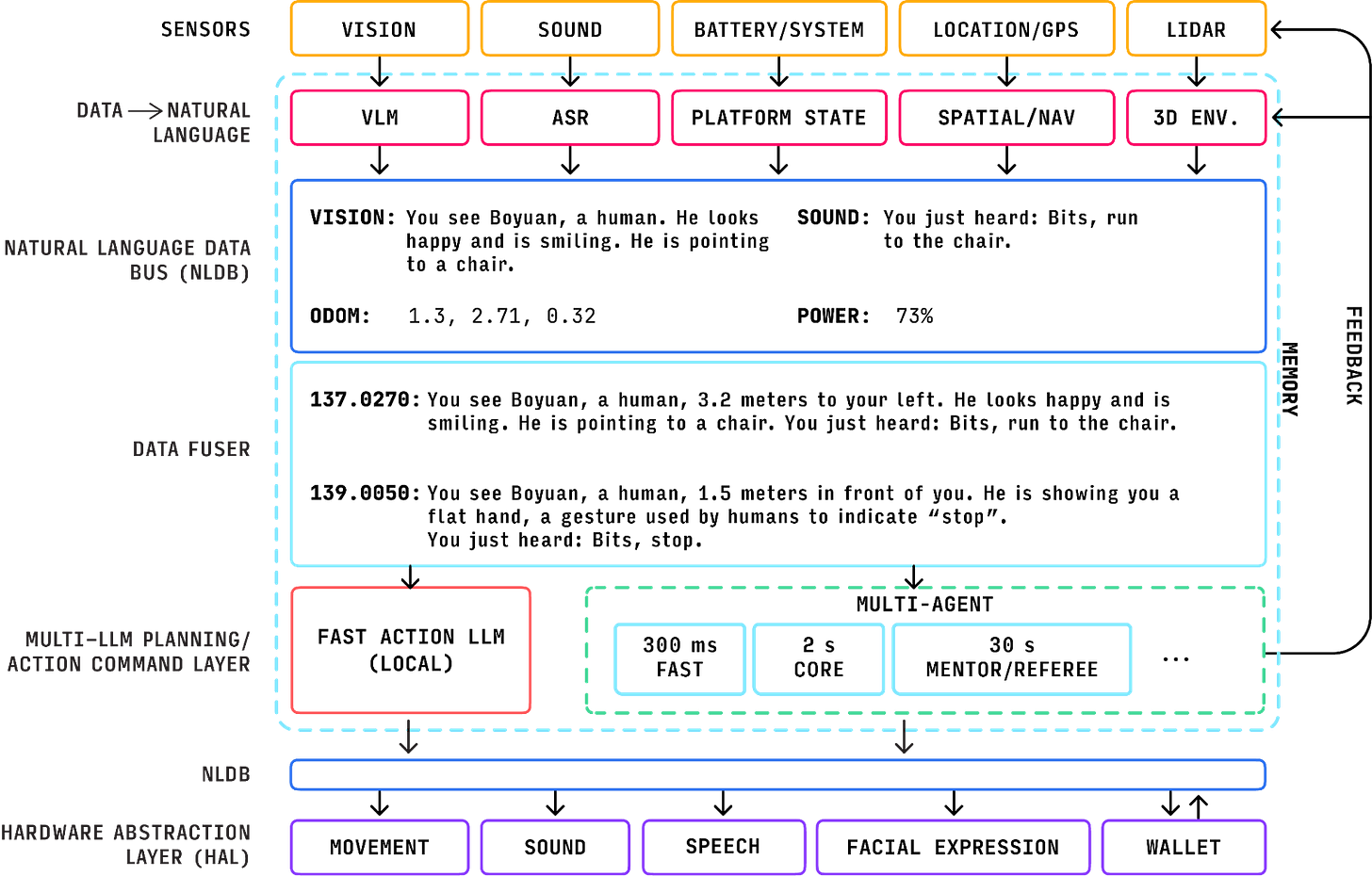

First, robots collect environmental information from various sensor modules such as cameras and microphones. This data arrives in binary form, and multimodal recognition models convert it into natural language: a VLM (Vision Language Model) processes visual information, and ASR (Automatic Speech Recognition) handles audio. This generates sentences like “A man points at the chair in front” and “The user said ‘go to the chair’.”

The converted sentences are collected on the Natural Language Data Bus. The Data Fuser weaves this information into a single situation report and delivers it to multiple LLMs, which analyze the situation and decide the robot’s next action.
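The flow above can be sketched in a few lines of Python. This is not OM1's code: the `NLDataBus` class, the `fuse` and `decide` functions, and the stub model are hypothetical stand-ins, and the vision and speech captions are hard-coded where a real system would call a VLM and an ASR model. It only shows the shape of the idea: sensor outputs become sentences, the sentences are merged into one paragraph, and a language model (stubbed here) picks the next action from that paragraph.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class NLDataBus:
    """Hypothetical natural language data bus: (timestamp, source, sentence) entries."""
    messages: list[tuple[float, str, str]] = field(default_factory=list)

    def publish(self, t: float, source: str, sentence: str) -> None:
        self.messages.append((t, source, sentence))


def fuse(bus: NLDataBus, since: float) -> str:
    """Hypothetical data fuser: merge recent sentences, oldest first, into one report."""
    recent = sorted(m for m in bus.messages if m[0] >= since)
    lines = [f"[{source}] {sentence}" for _, source, sentence in recent]
    return "Current situation:\n" + "\n".join(lines)


def decide(report: str, llm: Callable[[str], str]) -> str:
    """Ask a language model (any callable from prompt to text) for the next action."""
    prompt = report + "\nWhat should the robot do next? Answer with one action."
    return llm(prompt)


# Hard-coded captions stand in for VLM and ASR output.
bus = NLDataBus()
bus.publish(10.0, "vision", "A man points at the chair in front.")
bus.publish(10.2, "speech", "The user said 'go to the chair'.")


def fake_llm(prompt: str) -> str:
    # Stub in place of a real LLM call.
    return "move_to(chair)" if "go to the chair" in prompt else "wait()"


report = fuse(bus, since=9.0)
print(report)
print(decide(report, fake_llm))  # move_to(chair)
```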

This approach offers clear advantages. Because OM1 forms a natural language-based abstraction layer above the hardware, robots from different manufacturers can cooperate smoothly. In principle, Neo and Figure could both understand identical natural language commands and perform the same tasks. Each manufacturer keeps its proprietary hardware and systems, while OM1 enables cooperation with other robots.
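The abstraction-layer argument can be illustrated with a small Python sketch, assuming a hypothetical shared action vocabulary (here a single `move_to(target)` action) that each manufacturer maps onto its own hardware through a driver. `HardwareDriver`, `WheeledBaseDriver`, `LeggedDriver`, and `run_command` are illustrative names, not part of OM1.

```python
from abc import ABC, abstractmethod


class HardwareDriver(ABC):
    """Hypothetical per-manufacturer adapter below a shared action layer."""

    @abstractmethod
    def move_to(self, target: str) -> str: ...


class WheeledBaseDriver(HardwareDriver):
    def move_to(self, target: str) -> str:
        return f"wheels: plan path and drive to {target}"


class LeggedDriver(HardwareDriver):
    def move_to(self, target: str) -> str:
        return f"legs: generate gait and walk to {target}"


def run_command(action: str, driver: HardwareDriver) -> str:
    """Parse a shared action such as 'move_to(chair)' and pass it to the installed driver."""
    name, _, rest = action.partition("(")
    arg = rest.rstrip(")")
    if name != "move_to":
        raise ValueError(f"unsupported action: {name}")
    return driver.move_to(arg)


for driver in (WheeledBaseDriver(), LeggedDriver()):
    print(run_command("move_to(chair)", driver))
```

The layer above the drivers stays the same when a new robot joins; only a new driver has to be written.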

Beyond enabling cross-manufacturer cooperation, OM1 integrates other open-source models as runtime modules rather than competing with them. When robots need precise manipulation, OM1 utilizes Pi (Physical Intelligence) models. When multilingual speech recognition is needed, OM1 employs Meta’s Omnilingual ASR model. OM1 combines these modules as each situation requires, which makes it highly scalable and flexible.
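One way to picture situation-based module selection is a capability registry. The Python sketch below is hypothetical: the capability keys and handler functions are labels standing in for a Physical Intelligence policy and Meta's Omnilingual ASR, and no real model is called.

```python
from typing import Callable

# Hypothetical registry: capability name -> handler function.
MODULES: dict[str, Callable[[str], str]] = {}


def register(capability: str):
    """Decorator that records a handler under a capability name."""
    def wrap(fn: Callable[[str], str]) -> Callable[[str], str]:
        MODULES[capability] = fn
        return fn
    return wrap


@register("manipulation")
def pi_policy(task: str) -> str:
    # Placeholder for a Physical Intelligence manipulation model.
    return f"[physical-intelligence policy] {task}"


@register("speech_multilingual")
def omnilingual_asr(audio_ref: str) -> str:
    # Placeholder for a multilingual speech recognition model.
    return f"[omnilingual ASR] transcript of {audio_ref}"


def route(capability: str, payload: str) -> str:
    """Call the module registered for the capability the current situation needs."""
    try:
        handler = MODULES[capability]
    except KeyError:
        raise LookupError(f"no module registered for {capability!r}") from None
    return handler(payload)


print(route("manipulation", "thread the cable through the clip"))
```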

OM1’s strengths extend further. Because OM1 is built on LLMs, robots go beyond executing simple commands: they grasp situational context and make autonomous decisions.

Let’s examine a concrete example. Multiple objects sit in front of the robot. Someone requests “Pick up an item related to the desert.” Traditional robots fail because ‘desert items’ don’t exist in predefined rules. OM1 differs. It understands conceptual relationships through LLMs. It infers the connection between ‘desert’ and ‘cactus’ independently. It selects the cactus doll. OM1 establishes the foundation for robot collaboration and makes individual robots smarter.
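The contrast between a fixed rule table and an open-ended query can be sketched in Python. The rule table, object list, and prompt wording are hypothetical, and `stub_llm` simply returns a fixed answer where a real system would call an LLM that infers the desert-cactus link on its own. What the sketch does show is a useful guard: the model's answer is accepted only if it names an object the robot can actually see.

```python
from typing import Callable, Optional

objects = ["cactus doll", "umbrella", "snow globe"]

# Traditional approach: a predefined topic -> object table.
RULES = {"rain": "umbrella", "winter": "snow globe"}


def rule_based_pick(topic: str) -> Optional[str]:
    return RULES.get(topic)  # None for any topic the table does not list


def llm_pick(request: str, visible: list[str], llm: Callable[[str], str]) -> Optional[str]:
    prompt = (
        f"Objects in view: {', '.join(visible)}.\n"
        f"Request: {request}\n"
        "Reply with exactly one object name from the list."
    )
    answer = llm(prompt).strip().lower()
    return answer if answer in visible else None  # reject objects that are not in view


def stub_llm(prompt: str) -> str:
    # Fixed answer in place of a real LLM call.
    return "Cactus doll"


print(rule_based_pick("desert"))  # None
print(llm_pick("Pick up an item related to the desert.", objects, stub_llm))  # cactus doll
```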

3.2. FABRIC: A Network That Connects Distributed Robots as One

OM1 makes robots smarter and enables smooth communication between them. But beyond communication, a challenge remains. How can different robots trust each other when they cooperate? The system must verify who performed which tasks and whether they completed them properly.

Human society regulates behavior through law and guarantees performance through contracts. These mechanisms enable people to transact and cooperate safely with strangers. Robot ecosystems need equivalent mechanisms.

OpenMind solves this problem through ‘FABRIC’, a blockchain-based network. FABRIC connects robots and coordinates their cooperation.

Let’s examine FABRIC’s core structure. FABRIC starts by assigning an ‘identity’ to each robot. Every robot in the FABRIC network receives a unique identity based on ERC-7777 (Governance for Human Robot Societies).
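ERC-7777's actual on-chain interface is not reproduced here. As a hypothetical illustration of the idea only, the Python sketch below derives a unique identifier by hashing a robot's manufacturer, serial number, and public key with SHA-256, and keeps identities in an in-memory dictionary standing in for an on-chain registry. All names and fields are assumptions.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RobotIdentity:
    robot_id: str
    manufacturer: str
    role: str


class IdentityRegistry:
    """Hypothetical in-memory stand-in for an on-chain identity registry."""

    def __init__(self) -> None:
        self._by_id: dict[str, RobotIdentity] = {}

    def register(self, manufacturer: str, serial: str, public_key_hex: str, role: str) -> RobotIdentity:
        # Same inputs always hash to the same ID, so re-registration is detectable.
        digest = hashlib.sha256(f"{manufacturer}|{serial}|{public_key_hex}".encode()).hexdigest()
        if digest in self._by_id:
            raise ValueError("robot already registered")
        identity = RobotIdentity(digest, manufacturer, role)
        self._by_id[digest] = identity
        return identity

    def lookup(self, robot_id: str) -> Optional[RobotIdentity]:
        return self._by_id.get(robot_id)


registry = IdentityRegistry()
dog = registry.register("Unitree", "GO2-0001", "ab12", "human friend and protector")
print(dog.robot_id[:16], dog.role)
```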

Robots with assigned identities share their location, task status, and environmental information with the network in real-time. They simultaneously receive status updates from other robots. Like a situation board or minimap in a tycoon game, all robots track each other’s positions and status in real-time through one shared map.
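The shared map can be sketched as a board that keeps each robot's newest status. This Python sketch is hypothetical: the `Status` fields, the five-second staleness window, and the rule of ignoring out-of-order updates are illustrative choices, not FABRIC's design.

```python
from dataclasses import dataclass


@dataclass
class Status:
    robot_id: str
    floor: int
    x: float
    y: float
    task: str
    timestamp: float  # seconds, from a clock all robots are assumed to share


class SharedMap:
    """Hypothetical shared situation board keeping the newest status per robot."""

    def __init__(self) -> None:
        self._latest: dict[str, Status] = {}

    def publish(self, status: Status) -> None:
        current = self._latest.get(status.robot_id)
        # Ignore updates that arrive out of order (older than the one already held).
        if current is None or status.timestamp > current.timestamp:
            self._latest[status.robot_id] = status

    def snapshot(self, now: float, max_age: float = 5.0) -> list[Status]:
        """Latest status of every robot, dropping entries older than max_age seconds."""
        return [s for s in self._latest.values() if now - s.timestamp <= max_age]


board = SharedMap()
board.publish(Status("A", 1, 2.0, 3.0, "carry box", 100.0))
board.publish(Status("B", 2, 5.0, 1.0, "pick shelf 7", 101.0))
board.publish(Status("B", 2, 4.0, 1.0, "pick shelf 7", 99.0))  # stale, ignored
for s in board.snapshot(now=102.0):
    print(s.robot_id, s.floor, (s.x, s.y), s.task)
```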

Simply sharing information is not enough. Robots may submit incorrect information. Sensor errors may occur and distort data. FABRIC leverages blockchain’s consensus mechanism to guarantee data reliability.

Consider a real-world scenario. Delivery robot A cooperates with warehouse robot B to transport goods. Robot B reports that it is on the 2nd floor. Nearby sensor robots and elevator robots cross-verify B’s location, much as multiple nodes verify transactions on a blockchain, and reach consensus on where B actually is. Suppose robot B reports the 2nd floor because of a sensor error but is actually on the 3rd floor. The verification process detects the discrepancy, the network records the corrected information, and robot A moves to the correct location on the 3rd floor.
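The cross-verification step can be approximated by a simple majority vote. The Python sketch below assumes, hypothetically, that at least three independent observers report the floor they see robot B on and that a strict majority must agree. It is a plain vote, not the Byzantine fault-tolerant consensus protocols that real blockchains use.

```python
from collections import Counter
from typing import Optional


def verify_location(self_report: int, observations: dict[str, int], quorum: int = 3) -> tuple[Optional[int], bool]:
    """Cross-check a robot's self-reported floor against independent observers.

    Returns (agreed_floor, discrepancy). agreed_floor is None when there are too
    few observers or no strict majority, in which case nothing should be recorded.
    """
    if len(observations) < quorum:
        return None, False
    floor, votes = Counter(observations.values()).most_common(1)[0]
    if votes * 2 <= len(observations):  # require a strict majority of observers
        return None, False
    return floor, floor != self_report


# Robot B claims floor 2; three observers see it on floor 3.
print(verify_location(2, {"sensor_1": 3, "sensor_2": 3, "elevator_1": 3}))  # (3, True)
```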

FABRIC’s role extends beyond verification. It also provides functions for the coming Machine Economy. The first is privacy protection. Blockchain transparency guarantees trust, but operating real robot ecosystems also requires privacy. FABRIC adopts a distributed structure that divides the network into subnets by task or location and connects them through net hub servers, which helps protect sensitive information. The solution is not yet perfect, and further research is needed to strengthen privacy protection.
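The subnet-and-hub idea can be sketched as follows: full event records stay inside a subnet, and the hub only receives copies with sensitive fields stripped. The field names, the `Subnet` and `Hub` classes, and the redaction rule are hypothetical, chosen only to illustrate the structure described above.

```python
from dataclasses import dataclass, field

# Hypothetical examples of fields that should never leave a subnet.
SENSITIVE_FIELDS = {"camera_frame", "owner_name", "home_address"}


@dataclass
class Subnet:
    name: str
    records: list[dict] = field(default_factory=list)

    def record(self, event: dict) -> None:
        self.records.append(event)  # full detail stays inside this subnet


@dataclass
class Hub:
    """Hypothetical hub linking subnets; it only ever sees redacted summaries."""
    summaries: list[dict] = field(default_factory=list)
    synced: dict[str, int] = field(default_factory=dict)  # records already forwarded per subnet

    def sync(self, subnet: Subnet) -> None:
        start = self.synced.get(subnet.name, 0)
        for event in subnet.records[start:]:
            public = {k: v for k, v in event.items() if k not in SENSITIVE_FIELDS}
            self.summaries.append({"subnet": subnet.name, **public})
        self.synced[subnet.name] = len(subnet.records)


home = Subnet("home-42")
home.record({"robot": "neo-1", "task": "laundry", "status": "done", "owner_name": "Kim"})
hub = Hub()
hub.sync(home)
hub.sync(home)  # nothing new, so nothing is forwarded twice
print(hub.summaries)
```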

FABRIC also provides the Machine Settlement Protocol (MSP), which automates escrow, verification, and settlement. When the system verifies that a task is complete, it automatically settles payment in stablecoins and records all evidence on the blockchain. Robots will then evolve beyond trusted cooperation into economic agents that transact autonomously.
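The escrow-then-settle flow resembles a small state machine. The Python sketch below is a hypothetical illustration, not the MSP contract: funds are locked when the task is created, then either paid out or refunded once verification returns, and every step is appended to a list that stands in for on-chain records. Amounts are integer cents of an assumed dollar-pegged stablecoin, which avoids floating-point rounding.

```python
from enum import Enum


class State(Enum):
    FUNDED = "funded"
    RELEASED = "released"
    REFUNDED = "refunded"


class Escrow:
    """Hypothetical escrow for one robot-to-robot task."""

    def __init__(self, payer: str, payee: str, amount_cents: int, ledger: list[str]) -> None:
        if amount_cents <= 0:
            raise ValueError("amount must be positive")
        self.payer, self.payee, self.amount = payer, payee, amount_cents
        self.state = State.FUNDED
        self.ledger = ledger  # append-only list standing in for on-chain records
        self.ledger.append(f"lock {amount_cents} from {payer}")

    def settle(self, task_verified: bool, evidence: str) -> State:
        """Pay the payee if the task was verified, otherwise refund the payer."""
        if self.state is not State.FUNDED:
            raise RuntimeError(f"escrow already {self.state.value}")
        if task_verified:
            self.state = State.RELEASED
            self.ledger.append(f"pay {self.amount} to {self.payee}; evidence={evidence}")
        else:
            self.state = State.REFUNDED
            self.ledger.append(f"refund {self.amount} to {self.payer}; evidence={evidence}")
        return self.state


ledger: list[str] = []
job = Escrow("household_robot", "mart_robot", 1250, ledger)
job.settle(True, "delivery-photo-hash")
print(job.state, ledger)
```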

4. What If: Future Daily Life Through OpenMind

4.1. A Whole New World: Utopia with Robots

We have long dreamed of a ‘Machine Economy’ where robots directly participate in economic activities. Robots judge independently, order goods, cooperate with other robots, and exchange value. OpenMind is now working to turn this dream into reality.

What kind of daily life can unfold? Watch OpenMind’s demo video. You ask the robot “Please buy me lunch.” The robot moves to the store, confirms the order, pays directly with cryptocurrency, and brings back the food. This appears simple on the surface but carries significant meaning. Robots no longer just execute commands in predefined environments. They transform into economic agents that judge and act independently.

The imagination expands further. Beyond transactions between people and robots, robot-to-robot transactions will emerge. For example, a household humanoid robot doing housework runs out of necessary supplies. It independently orders products from a nearby mart robot, and a smart contract is generated automatically in the process. The mart robot delivers the product. The household robot confirms the goods and settles payment in stablecoins.

New forms of value exchange that never existed before will emerge. A delivery robot calculates the optimal route to its destination. It requests real-time data from traffic robots and pays a small fee in return. Even small daily cooperation becomes a transaction.

4.2. A Dangerous World: Dystopia with Robots

Robotics no longer belongs to science fiction movies. In China, consumers buy robot dogs (Unitree Go2) for about $1,000 and humanoid robots (Engine AI PM01) for about $12,000. Mass adoption accelerates rapidly.

The growing number of robots in daily life is not the main concern. Robot judgment remains limited, and safety is not yet secured. If a robot misperceives a situation and makes a dangerous decision, it can directly harm people. That harm could become a disaster, not just a simple accident.

OpenMind tackles this problem head-on. It assigns a unique identity to every robot through the ERC-7777 standard and uses this identity as a guardrail. For example, a robot dog receives the identity of “human friend and protector.” This identity is meant to prevent the robot from attacking or harming people and to keep it acting in a friendly, safe manner. The robot continuously confirms its identity and role and blocks actions that fall outside them.
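An identity-based guardrail can be pictured as an allowlist checked before every action. In this hypothetical Python sketch, each role maps to the actions it permits, and anything not listed, including any action for an unknown role, is blocked. The role string comes from the example above; the action names are illustrative and not drawn from ERC-7777.

```python
# Hypothetical role -> permitted actions; real rule sets would be far richer.
ROLE_POLICIES: dict[str, set[str]] = {
    "human friend and protector": {"walk", "sit", "follow_owner", "fetch", "bark_alert"},
}


def guarded_execute(role: str, action: str) -> str:
    """Run an action only if the robot's role explicitly allows it."""
    allowed = ROLE_POLICIES.get(role, set())  # unknown role: nothing is allowed
    if action not in allowed:
        return f"blocked: '{action}' is outside the role '{role}'"
    return f"executing {action}"


print(guarded_execute("human friend and protector", "fetch"))
print(guarded_execute("human friend and protector", "bite"))
```

Deny-by-default is a deliberate choice here: a missing rule blocks an action rather than permitting it.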

OpenMind goes further. It is working on a ‘Physical AI Safety Layer’ in collaboration with AIM Intelligence. This layer is designed to block robot hallucinations and defend against external intrusions and attacks. Consider an example: a robot tries to move while holding a sharp object, and a child stands nearby. The system recognizes this as an ‘injury risk’ and immediately halts the action.
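The sharp-object example reduces to a rule checked before each motion. The Python sketch below is hypothetical: the list of sharp objects and the 1.5 m safety radius are assumed values, and a real safety layer would depend on perception models to produce the object and person data that are passed in directly here.

```python
import math
from dataclasses import dataclass

SHARP_OBJECTS = {"knife", "scissors", "screwdriver"}  # hypothetical category list
CHILD_SAFETY_RADIUS_M = 1.5  # assumed threshold, not a published value


@dataclass
class Person:
    is_child: bool
    x: float
    y: float


def check_motion(held_object: str, robot_xy: tuple[float, float], people: list[Person]) -> str:
    """Return 'halt' if moving now would carry a sharp object near a child, else 'proceed'."""
    if held_object in SHARP_OBJECTS:
        for p in people:
            if p.is_child and math.dist(robot_xy, (p.x, p.y)) < CHILD_SAFETY_RADIUS_M:
                return "halt"
    return "proceed"


print(check_motion("knife", (0.0, 0.0), [Person(is_child=True, x=1.0, y=0.0)]))  # halt
```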

5. OpenMind: Building Tomorrow’s Robot Society

OpenMind is moving beyond the research stage and preparing to drive substantial transformation in the robotics industry.

Founder Jan Liphardt, a former Stanford biophysics professor, stands at the center. He researched coordination and cooperation mechanisms between complex systems. He now designs structures where robots judge autonomously and collaborate. He leads overall technology development.

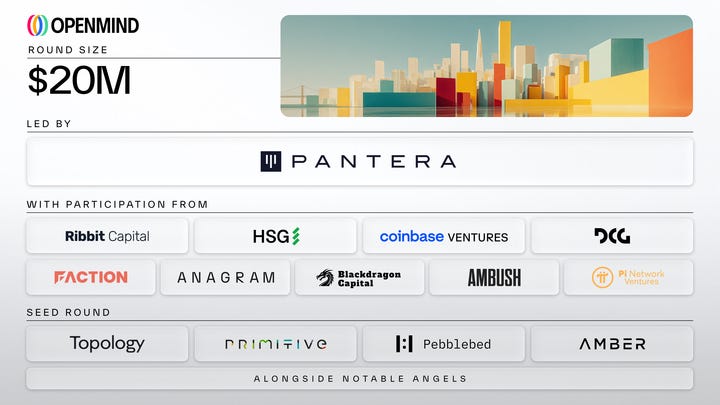

This technical leadership attracted $20 million in a funding round led by Pantera Capital. OpenMind establishes a financial foundation for technology development and ecosystem expansion. It secures the execution capability to realize its vision.

The market responds positively. Major hardware companies including Unitree, DEEP Robotics, Dobot, and UBTECH adopt OM1 as their core technology stack. The collaboration network expands rapidly.

However, challenges remain. The FABRIC network is still in preparation. Unlike digital environments, the physical world presents far more variables. Robots must operate in unpredictable real-world environments, not controlled labs, so complexity increases significantly.

Nevertheless, robot cooperation and safety require long-term solutions. We need to watch how OpenMind tackles this challenge and what role it plays in the robotics ecosystem.

Disclaimer

This report was partially funded by OpenMind. It was independently produced by our researchers using credible sources. The findings, recommendations, and opinions are based on information available at publication time and may change without notice. We disclaim liability for any losses from using this report or its contents and do not warrant its accuracy or completeness. The information may differ from others’ views. This report is for informational purposes only and is not legal, business, investment, or tax advice. References to securities or digital assets are for illustration only, not investment advice or offers. This material is not intended for investors.

Terms of Usage

Tiger Research allows the fair use of its reports. ‘Fair use’ is a principle that broadly permits the use of specific content for public interest purposes, as long as it doesn’t harm the commercial value of the material. If the use aligns with the purpose of fair use, the reports can be utilized without prior permission. However, when citing Tiger Research’s reports, it is mandatory to 1) clearly state ‘Tiger Research’ as the source, 2) include the Tiger Research logo following the brand guidelines. If the material is to be restructured and published, separate negotiations are required. Unauthorized use of the reports may result in legal action.